Introduction

In this second part of the article, I describe several different uses of live electronics. Each represents a noteworthy technique that could be used on its own or combined with others within a single real-time system.

The works covered are: Cluster by Enrico Francioni, which makes use of a timeline (using the timeinsts opcode) and delay lines; eSpace by Enrico Francioni, an example of quadraphonic spatialisation of a signal; Accompanist by John ffitch, which reacts to rhythmic impulses; Claire by Matt Ingalls, which analyses the pitch of the incoming signal and reacts with a large element of randomness; Etude 3 by Bruce McKinney, an experiment based around the pvspitch opcode; and Im Rauschen, cantabile by Luís Antunes Pena, which is driven by keyboard commands read with the sensekey opcode.

A number of tutorials have been prepared that demonstrate many of the techniques that are described in this article. They can be downloaded as a zip archive here.

I. Description of Examples of Live Electronics Pieces that use Csound

Cluster by Enrico Francioni

My work Cluster[1], for any instrument, live electronics and fixed medium, comprises three constituent elements: the sound of the solo instrument, the reproduction of the sounds of the instrument (processed in a variety of ways), and the part for fixed medium (tape).

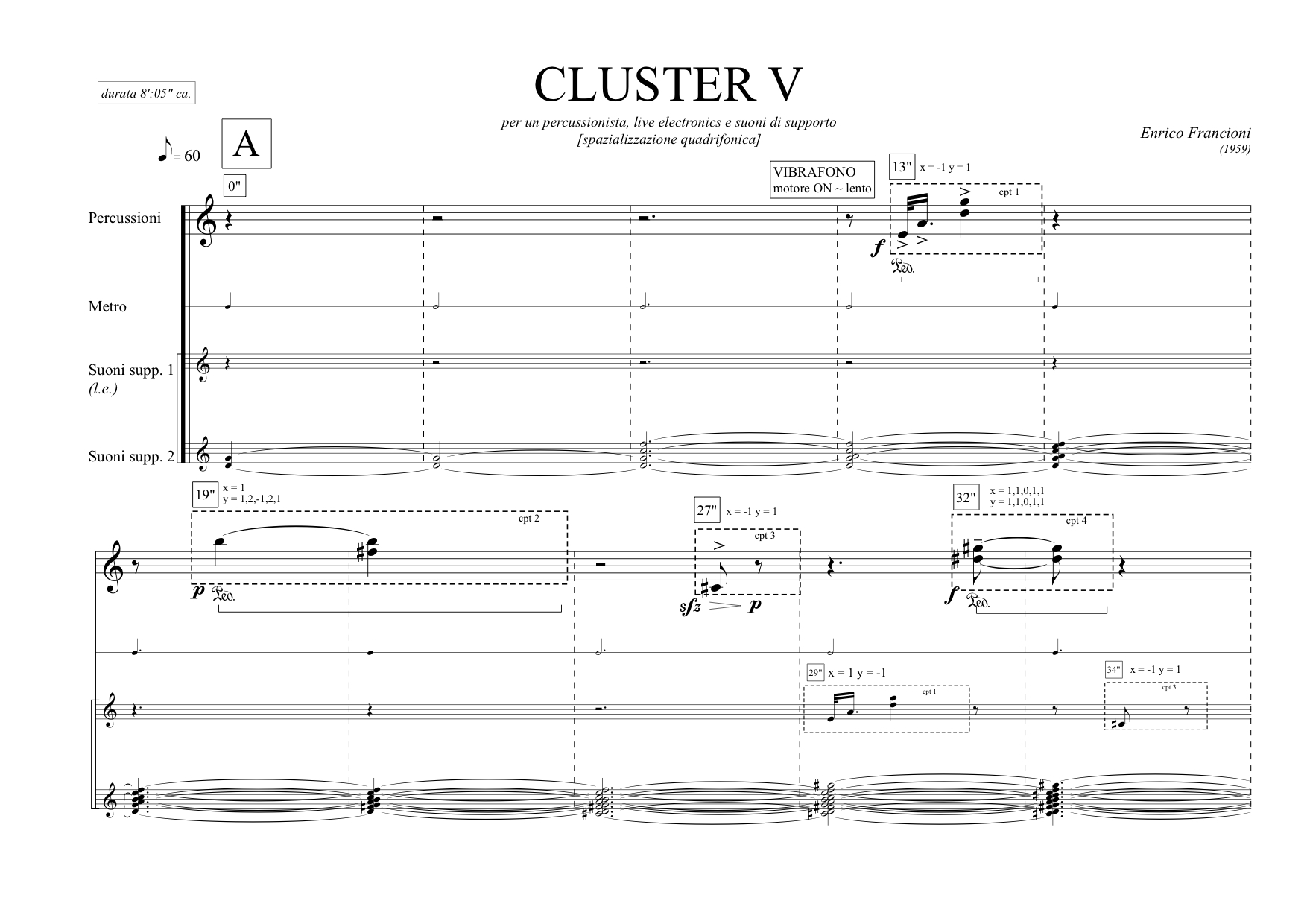

Shown below is a page of the score, in this instance for vibraphone, live electronics and prepared electronic sound.

Figure 1. Page 1 from the score for Cluster by Enrico Francioni.

The version with live electronics interests me for several reasons.

One reason is the way the soloist can engage in a dialogue with the various complementary sounds, thanks to the algorithm's ability to capture, reproduce and delay some of their phrases. I think this scenario has aspects in common with Solo N.19 by Karlheinz Stockhausen, which I wrote about in Part I of this article.

Another reason that this piece’s method interests me is that the signal path within the code is always dominated by a time-line, thereby allowing specific events from the live score to be isolated and processed in specific ways.

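For this I used the k-rate output of timeinsts, which gives the time in seconds since the instrument instance started. Comparing this value with fixed time boundaries allowed me to implement the events described above simply as conditional branches (if ... elseif ...) in the code.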
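Stripped of the CsoundQt widgets and the sound file, the timeline idea can be sketched in a few lines. This is a minimal, hypothetical example rather than code from Cluster: a 440 Hz test tone stands in for the live input, the section boundaries (3 s and 6 s) mirror those of the example that follows, and the output file name timeline_sketch.wav is arbitrary.

```csound
<CsoundSynthesizer>
<CsOptions>
-o timeline_sketch.wav -W
</CsOptions>
<CsInstruments>
sr = 44100
ksmps = 64
nchnls = 2
0dbfs = 1

giSine ftgen 0, 0, 8192, 10, 1

instr 1
  ktime timeinsts                   ; seconds since this instance started
  asrc  oscili 0.3, 440, giSine     ; stand-in for the live input (assumption)
  amod  oscili 1, 300, giSine       ; 300 Hz carrier for ring modulation

  ; the timeline: each section is just a branch on ktime
  if ktime < 3 then                 ; section A: silence
    kgain = 0
  elseif ktime < 6 then             ; section B: ring-modulated sound
    kgain = 1
  else                              ; section C: silence again
    kgain = 0
  endif

  ksmooth port kgain, 0.01          ; short glide to avoid clicks at the boundaries
  kswitch changed kgain             ; 1 on the k-cycle where the section changes
  printf "t = %.2f s: gain %.0f\n", kswitch, ktime, kgain

  aout = asrc * amod * ksmooth
  outs aout * 0.6, aout * 0.1       ; weighted to the left, like instr 1 below
endin
</CsInstruments>
<CsScore>
i 1 0 10
e
</CsScore>
</CsoundSynthesizer>
```

Adding a section is then only a matter of adding another elseif with its own time boundary.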

In this two-channel example, the Csound score (action time and duration) determines when each instrument runs, and the timeline inside instrument 1 determines when the input signal is processed. Between 3 and 6 seconds the processed (ring-modulated) audio is sent to the output in real time and is simultaneously captured in the global variable gaRM; instrument 2 sends it to the output again 4 seconds later, using the delay opcode. Some things to note about the orchestra:

- Instrument 1 outputs the processed signal on two channels, weighted towards the left.

- Instrument 2 outputs the same processed signal, delayed, on two channels, weighted towards the right.

- The invalue and outvalue opcodes exchange values with widgets in the CsoundQt graphical interface (input selection, timer and status indicators).

<CsoundSynthesizer>

<CsInstruments>

; header

sr = 44100

ksmps = 64

nchnls = 2

0dbfs = 1

gaRM init 0

gktinstr init 0 ; elapsed time, shared with instr 2

;=================================================

instr 1 ; signal processing

;=================================================

ktinstr timeinsts

gktinstr = ktinstr

outvalue "timer", ktinstr

kin invalue "in"

if (kin == 0) then

aSIG soundin "demo.wav"

else

aSIG inch 1

endif

; SILENCE

if (ktinstr >= 0) && (ktinstr < 3) then

outvalue "capture", 0

kgaincb = 0

; SOUND + CAPTURE + FX

elseif (ktinstr >= 3) && (ktinstr < 6) then

outvalue "capture", 1

aosc oscili 1, 300, 1

aRM = aSIG*(aosc*4) ; RM

gaRM = aRM

kgaincb = 1

; SILENCE

elseif (ktinstr >= 6) && (ktinstr <= 10) then

outvalue "capture", 0

kgaincb = 0

endif

outs aRM*kgaincb*.6, aRM*kgaincb*.1

endin

;=================================================

instr 2 ; signal revived

;=================================================

adelay delay gaRM, 4

; SILENCE

if (gktinstr >= 0) && (gktinstr < 7) then

outvalue "revived", 0

kgaincb = 0

; SOUND DELAY+ REVIVED (FX)

elseif (gktinstr >= 7) && (gktinstr <= 10) then

outvalue "revived", 1

kgaincb = 1

endif

outs adelay*kgaincb*.1, adelay*kgaincb*.6 ; processed signal and delayed output (unbalanced on the right channel)

clear gaRM

endin

</CsInstruments>

<CsScore>

f1 0 1024 10 1

i1 0 10

i2 0 10

e

</CsScore>

</CsoundSynthesizer>

This piece is also interesting in terms of the spatialisation of the sound materials within a quadraphonic system. I am particularly interested in designing sound paths through the acoustic space that make logical sense.

Creating the fixed-medium (tape) part for this piece was not trivial, but on hearing it, it is clear that it contains material derived, at least tonally, from what has already been heard.

At various times the software either processes the sound of the live instrument or leaves it untreated; sometimes it is relayed back in real time and at other times delayed, but it is always spatialised.

The prepared part for fixed medium is made up of bands of sound that form clusters and serve as the backdrop to the other sonic components. These sounds all derive from a single vibraphone pitch (B3), treated in deferred time with spectral analysis and resynthesis. In Csound, a typical tool for this kind of processing is the phase vocoder.
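As a rough illustration of this kind of deferred-time spectral treatment, the sketch below turns a single pitch into a narrow band of sound with Csound's streaming phase vocoder: the analysis is smeared in time with pvsblur and resynthesised at five transpositions a quarter-tone apart. It is a hypothetical example, not the processing actually used for the tape part of Cluster; a synthetic decaying tone at B3 (about 246.94 Hz) with an invented spectrum (partials 1, 4 and 10) stands in for the vibraphone recording.

```csound
<CsoundSynthesizer>
<CsOptions>
-o pv_cluster_sketch.wav -W
</CsOptions>
<CsInstruments>
sr = 44100
ksmps = 64
nchnls = 2
0dbfs = 1

; invented partial spectrum standing in for a vibraphone note
giVib ftgen 0, 0, 8192, 10, 1, 0, 0, 0.3, 0, 0, 0, 0, 0, 0.1

instr 1
  aenv  expon 0.5, p3, 0.001               ; long exponential decay
  asrc  oscili aenv, 246.94, giVib         ; B3
  fsrc  pvsanal asrc, 2048, 512, 2048, 1   ; FFT 2048, hop 512, Hanning window
  fblur pvsblur fsrc, 0.3, 0.5             ; average the analysis over 0.3 s

  ; five transpositions a quarter-tone (2^(1/24)) apart, centred on B3
  f1 pvscale fblur, 2 ^ (-2/24)
  f2 pvscale fblur, 2 ^ (-1/24)
  f3 pvscale fblur, 1
  f4 pvscale fblur, 2 ^ (1/24)
  f5 pvscale fblur, 2 ^ (2/24)

  a1 pvsynth f1
  a2 pvsynth f2
  a3 pvsynth f3
  a4 pvsynth f4
  a5 pvsynth f5

  ; lower transpositions left, higher ones right, the original in the centre
  outs (a1 + a2 + 0.5 * a3) * 0.4, (a4 + a5 + 0.5 * a3) * 0.4
endin
</CsInstruments>
<CsScore>
i 1 0 12
e
</CsScore>
</CsoundSynthesizer>
```

Widening the transposition ratios, or replacing the synthetic tone with a recorded note read by diskin2, would give broader or more realistic bands.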

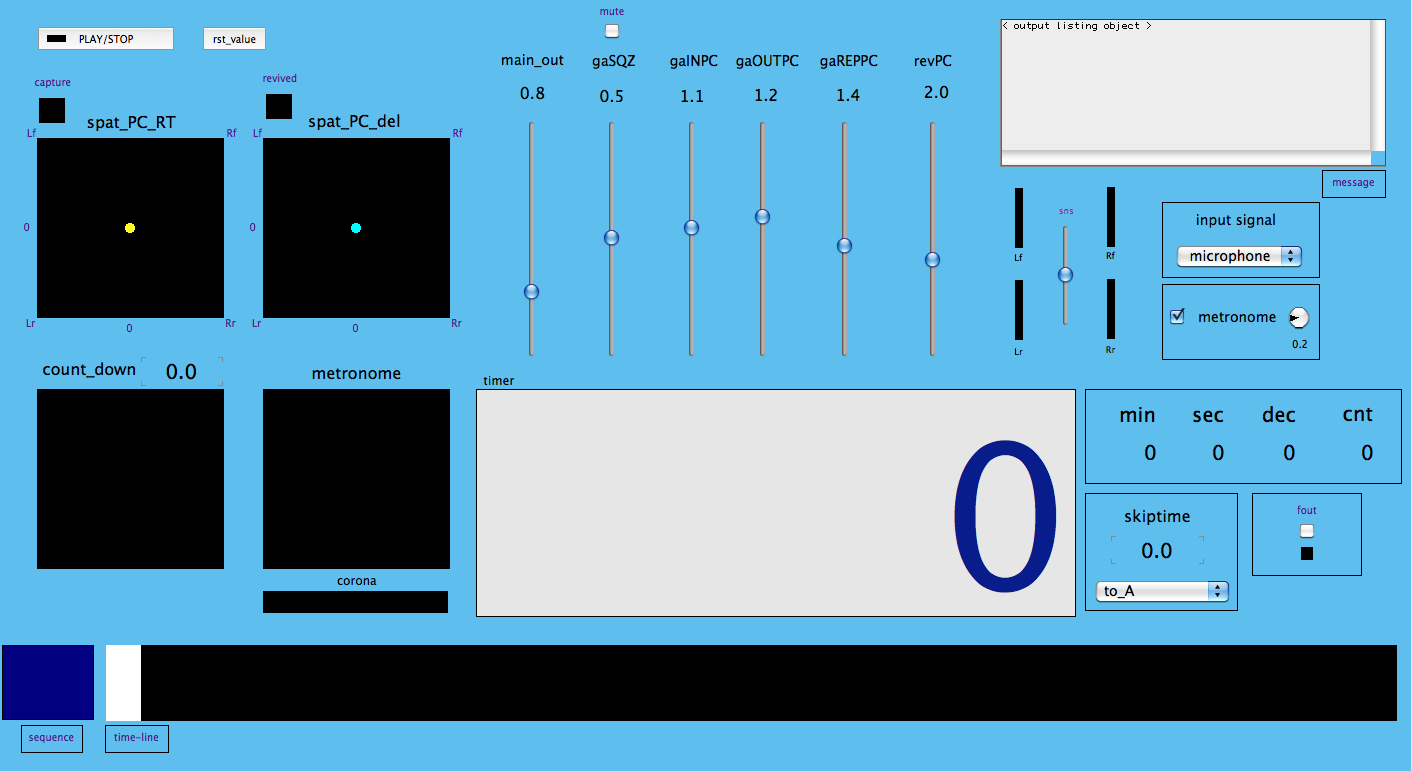

This piece is carried out as a ‘one-man performance’. This is fulfilled with the assistance of the graphical interface which is of fundamental importance. In addition to the interactive GUI controls (shown below), the timer and the time-line tools contribute to the dynamics of the electronics.

Figure 2. GUI for Cluster by Enrico Francioni.

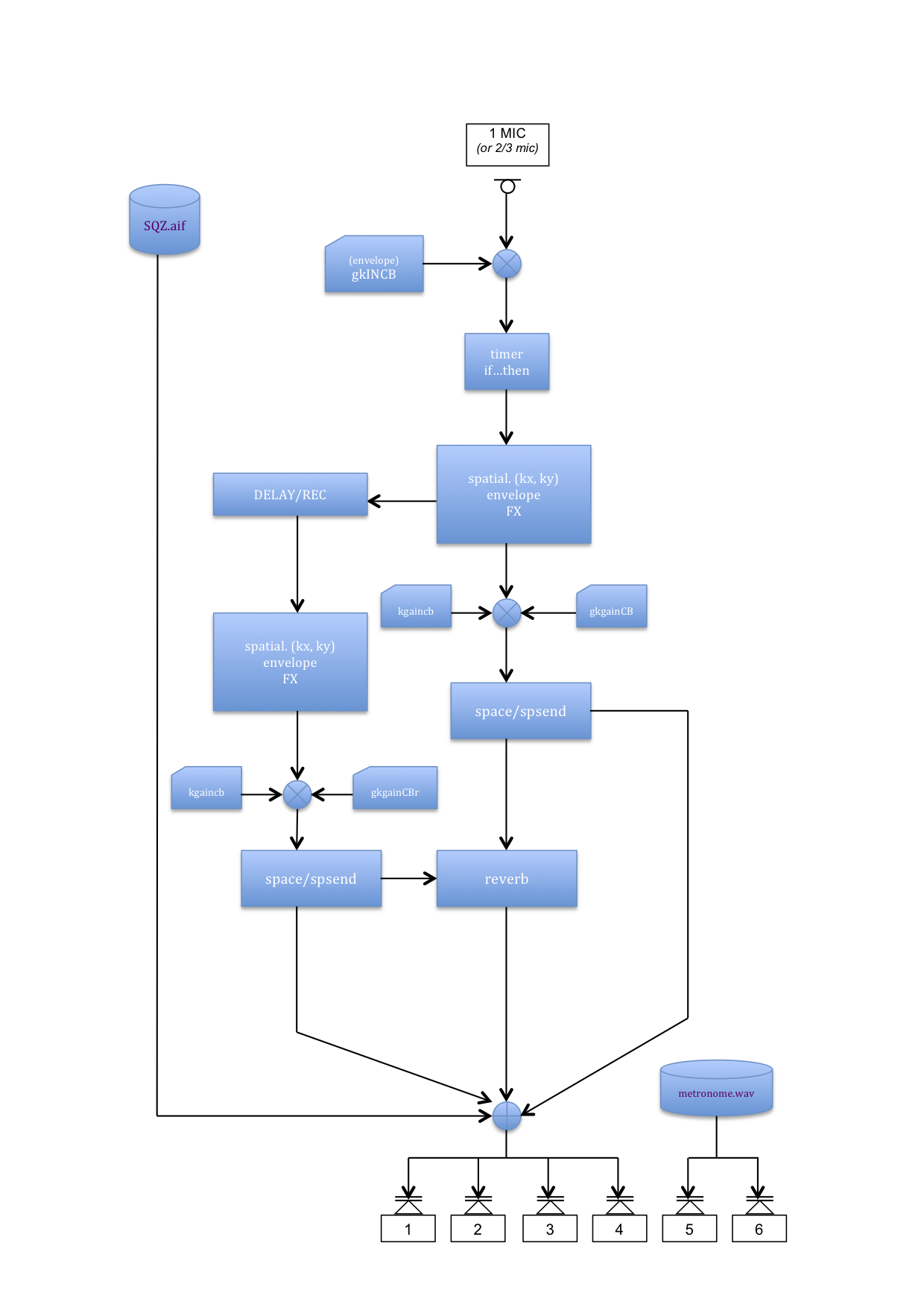

The following diagram illustrates the signal path with its treatment, the tape part and routing of the audio of a metronome.

Figure 3. Flow diagram for Cluster by Enrico Francioni.

eSpace by Enrico Francioni

I will now turn to the use of real-time spatial movement in my piece eSpace[6]. Some years ago I had the idea of adding effects to some piano pieces by the composer Henry Cowell, to give the pieces an additional dimension. At the time I was studying the placement and movement of sound in a quadraphonic space, and both my teacher and I felt that this would be a good exercise.

My contribution would be limited to the design of the patch and to the movement of the spatialised signals during the performance of Cowell’s pieces.

Intrigued by the subject, I built on the knowledge I had gained and developed a control system based around an efficient graphical interface. The system had to take into account several kinds of movement of the signal in space, such as:

- straight and transverse movements

- curvilinear movements and spiral movements

- random movements

- free movements guided by hand (for example with a GUI joystick)

- sinusoidal ‘ping-pong’ movements

The code for this system uses several instruments, each with a specific role in routing the signal; I would now like to describe some of them. The first moves the signal along straight segments, repeating the path cyclically (the instrument reinitializes itself every idur_seg seconds).

;===========================================

instr 3 ; DIRECTIONS STRAIGHT

;===========================================

reset:

idur_seg = 36

idur = idur_seg * (1/9)

ir = 2.25 ; conversion value for the meter in the Widgets Panel

ia = -1

ib = 1

kx linseg ia, idur, ib, idur, ib, idur, ia, idur, ia, idur, ib, idur, ib, idur, ia, idur, ib, idur, ia ; segment x

ky linseg ib, idur, ib, idur, ia, idur, ia, idur, ib, idur, ia, idur, ib, idur, ia, idur, ia, idur, ib ; segment y

timout 0, idur_seg, continue

reinit reset

continue:

rireturn ; end of the reinit pass

kamp linen 1, .7, p3, 1

a1, a2, a3, a4 space gasig * gkbutton3 * kamp, 0, 0, .01, kx, ky

ar1, ar2, ar3, ar4 spsend

kx_2 = (kx + (ir * .5)) / ir ; kx conversion values for the meter

ky_2 = (ky + (ir * .5)) / ir ; ky conversion values for the meter

outvalue "kx2out", kx_2 ; export values in the meter

outvalue "ky2out", ky_2 ; export values in the meter

outvalue "kxd2out", kx ; export values in the text-value display

outvalue "kyd2out", ky ; export values in the text-value display

ga1B = ga1B + ar1

ga2B = ga2B + ar2

ga3B = ga3B + ar3

ga4B = ga4B + ar4

outq a1, a2, a3, a4

gasig = 0

endin

The variables kx and ky shape the motion of the signal within the quadraphonic space. The following fragment, from another instrument, shows how these two values are generated for circular and spiral movements.

; calculation of kx and ky
kv invalue "kvers" ; import values 1/0 from the GUI
kvers = (kv * ir) - (ir * .5) ; 1 -> +1.125, 0 -> -1.125 (with ir = 2.25, as in instr 3): sets the direction
kangolo init 3.14159 ; the initial position (angle, in radians) of the signal
inc = 6.2832 ; 2*pi = one complete turn; -6.2832 reverses the direction
incr = inc / p3 / kr * 3 ; angle added on each k-cycle: the final multiplier (here 3) sets the number of laps around the listener within p3
; (the number of laps may also be less than 1 or fractional; kincr, kvel and kvers below scale the speed further)
kincr linseg incr, 29.5, incr * 100, 1, incr * 100, 29.5, incr * .5 ; variation of the speed of the signal in space
kval linseg 1, 30, .001, 30, 1 ; radius: from the edge to the centre and back
kvel invalue "kvel" ; speed control from the GUI
kincr1 = kincr * kvel
kangolo = kangolo + (kincr1 * kvers) ; kvers determines whether the movement is clockwise or counterclockwise
kxx = cos(kangolo)
kyy = sin(kangolo)
kx = kxx * kval ; multiplying by kval produces a spiral movement
ky = kyy * kval ; (the signal moves to the centre and then back out)
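A self-contained variant of the circular and spiral movement may make the geometry easier to follow. This is a sketch under assumptions, not the eSpace code: white noise stands in for gasig, the angle is taken from a phasor (so the speed is constant, unlike the accumulated and time-varying increment above), and three laps in p3 seconds is an arbitrary choice. The radius curve has the same shape as kval.

```csound
<CsoundSynthesizer>
<CsOptions>
-o spiral_sketch.wav -W
</CsOptions>
<CsInstruments>
sr = 44100
ksmps = 64
nchnls = 4
0dbfs = 1

instr 1
  asig   rand 0.3                          ; stand-in for the input signal
  iPi    = 4 * taninv(1)
  iLaps  = 3                               ; laps around the listener within p3 (arbitrary)
  iDir   = 1                               ; 1 or -1: reverses the direction
  kphase phasor iLaps / p3                 ; ramps 0..1, iLaps times in p3
  kangle = iPi + iDir * 2 * iPi * kphase   ; start at pi, like kangolo
  krad   linseg 1, p3/2, .001, p3/2, 1     ; towards the centre and back out
  kx     = cos(kangle) * krad
  ky     = sin(kangle) * krad
  a1, a2, a3, a4 space asig, 0, 0, 0, kx, ky   ; ifn 0: position from kx, ky
  outq a1, a2, a3, a4
endin
</CsInstruments>
<CsScore>
i 1 0 20
e
</CsScore>
</CsoundSynthesizer>
```

Setting krad to a constant 1 gives a plain circle; to vary the speed during the movement, the angle can be accumulated from a changing increment, as the fragment above does.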

Shown below is the code for free movement by GUI joystick.

kx_in invalue "kx1in" ; import the x value from the GUI joystick
ky_in invalue "ky1in" ; import the y value from the GUI joystick
kx port kx_in, .2 ; smoothing
ky port ky_in, .2 ; smoothing

The following fragment, from another instrument, places the signal at random locations.

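kcpsx linseg .1, 30, .7, 30, .1 ; rate of the x-range oscillator
kcpsy linseg .2, 30, .1, 30, .3 ; rate of the y-range oscillator
kamp_x oscili 1, kcpsx, 2 ; range of the random x positions
kamp_y oscili 1, kcpsy, 2 ; range of the random y positions
kcps_x line .1, 60, 1 ; rate of new random x positions (0.1 to 1 per second)
kcps_y line .1, 60, 1 ; rate of new random y positions
kx randh kamp_x, kcps_x ; random x, held until the next value
ky randh kamp_y, kcps_y ; random y, held until the next value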
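The random placement can also be tried on its own. In this hypothetical sketch, table 2 (whose contents the excerpt does not show) is assumed to be a sine, noise stands in for the input, and randi replaces randh so that the source glides between random positions instead of jumping; changing randi back to randh restores the jumps of the original.

```csound
<CsoundSynthesizer>
<CsOptions>
-o random_space_sketch.wav -W
</CsOptions>
<CsInstruments>
sr = 44100
ksmps = 64
nchnls = 4
0dbfs = 1

gi2 ftgen 2, 0, 8192, 10, 1               ; assumption: table 2 is a sine

instr 1
  asig   rand 0.3                          ; stand-in for the input signal
  kcpsx  linseg .1, 30, .7, 30, .1
  kcpsy  linseg .2, 30, .1, 30, .3
  kamp_x oscili 1, kcpsx, 2                ; slowly varying range for x
  kamp_y oscili 1, kcpsy, 2                ; slowly varying range for y
  kcps_x line .1, 60, 1                    ; new positions from 0.1 to 1 per second
  kcps_y line .1, 60, 1
  kx     randi kamp_x, kcps_x              ; randi: interpolated random values
  ky     randi kamp_y, kcps_y
  a1, a2, a3, a4 space asig, 0, 0, 0, kx, ky
  outq a1, a2, a3, a4
endin
</CsInstruments>
<CsScore>
i 1 0 60
e
</CsScore>
</CsoundSynthesizer>
```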

In the code and widgets panel, we have also included the following controls and instruments:

- a white-noise test signal to check each channel and the signal gain

- a switch for selecting the input signal

- a reverb unit

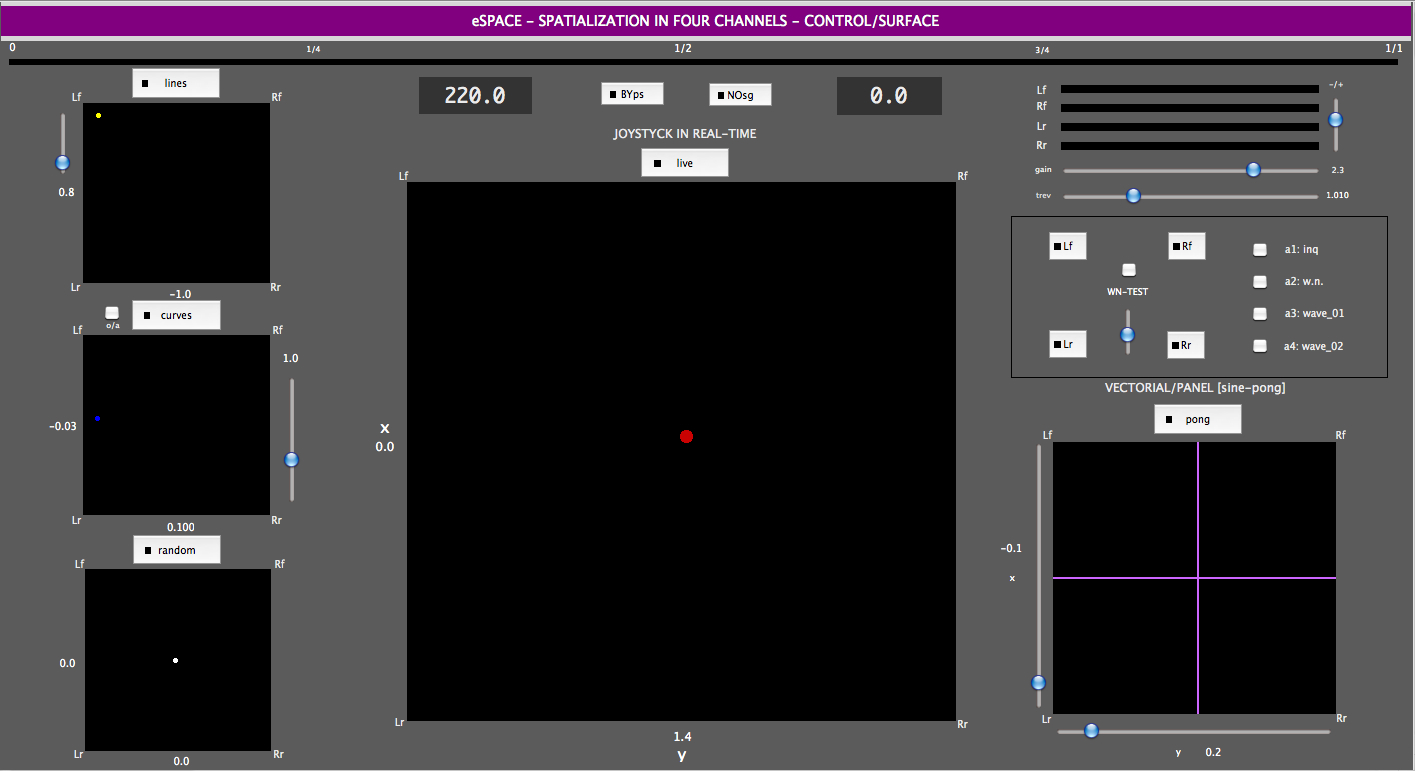

The selection of the various types of spatialization of the signal is carried out through a button exclusive to the Widget. Finally here is the interface of eSpace.

Figure 4. GUI from eSpace by Enrico Francioni.

To conclude this section of Part II, I would like to briefly review some experiments by other composers who have used Csound in real time for live electronics.

Accompanist by John ffitch

A notable example of the use of Csound for real-time interaction is Accompanist by John ffitch[5]. Some time ago John sent me the csd of Accompanist; one of the key mechanisms of the algorithm is the way it senses and reacts to rhythmic impulses. Here is a brief description of the algorithm by Andrew Brothwell and John ffitch: "The development of a computer musical accompanist is presented, that attempts to model some of the characteristics of human musicianship, particularly concentrating on the human sense of rhythm, and the playing 'by ear' skill set. It specifically focuses on blues accompaniment, and works with no previous knowledge of the particular song that is about to be played. The performance of the lead musician is constantly monitored and reacted to throughout the song. The system is written in Csound and runs in real time." [Abstract by Andrew Brothwell and John ffitch taken from the article An Automatic Blues Band.]

In the systems analysed so far in this series, pitch and/or RMS amplitude data are often used to influence the behaviour of the algorithm. This patch also tracks pitch, with the pitchamdf opcode, which uses the AMDF (average magnitude difference function) method and outputs both pitch and amplitude tracking signals. The method is quite fast, should run in real time, and generally works best with monophonic signals. In contrast to the other systems, however, this patch also lets us use time, duration and rhythm as parameters that define the triggering of other events. This seems to me a really interesting approach when working with a live signal input.

The method used in this algorithm, originally intended to create an accompaniment that is as ‘human’ as possible for a genre such as the blues, can become a starting point for designing other interactive systems with different musical goals. As always, the first decisions concern how the input signal is to be treated and what information is to be extracted from it.

The code excerpt below shows how a live audio signal can be read into Csound, low-pass filtered with the tone opcode to suppress high-frequency transients, and then measured with the rms opcode (root-mean-square method) to track changes in sound intensity.

ktime timek ; current time, in k-cycles
asig in ; mic input
asig tone asig, 1000 ; apply a low-pass filter
krms rms asig ; the rms of the current input
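To experiment with this analysis chain without a microphone, the sketch below feeds it a synthetic pulse train and reports onsets. It is a minimal, hypothetical example, not Accompanist's code. Noise bursts every 0.5 s stand in for the performer's beats. 0dbfs is set to 1, so the threshold of 0.02 is on that scale (the scale of gkonsetlev = 1500 in the original depends on the 0dbfs setting of that patch). The rising-edge test with trigger, plus a quarter-second gap similar in spirit to the kr/2 test used in the count-in, is only one possible way of turning the RMS curve into discrete onsets.

```csound
<CsoundSynthesizer>
<CsOptions>
-n -d
</CsOptions>
<CsInstruments>
sr = 44100
ksmps = 64
nchnls = 1
0dbfs = 1

instr 1
  ; stand-in for the microphone: 25 ms noise bursts every 0.5 s (120 bpm)
  kphs   phasor 2
  kgate  = (kphs < 0.05 ? 1 : 0)
  anoise rand 0.5
  asig   = anoise * kgate

  ; analysis chain as in the excerpt above
  ktime  timek                       ; elapsed time in k-cycles
  asig   tone asig, 1000             ; low-pass filter
  krms   rms asig                    ; running RMS amplitude

  ; onset = upward threshold crossing at least kr/4 k-cycles (0.25 s) after the last one
  kthresh = 0.02                     ; hypothetical threshold for 0dbfs = 1
  klast  init -1000000
  kup    trigger krms, kthresh, 0    ; mode 0: fires on an upward crossing
  if kup == 1 && ktime > klast + kr/4 then
    klast = ktime
    printks "onset at k-cycle %.0f (%.3f s)\n", 0, ktime, ktime/kr
  endif
endin
</CsInstruments>
<CsScore>
i 1 0 3
e
</CsScore>
</CsoundSynthesizer>
```

With a real microphone, asig would come from in (or inch) as in the excerpt, and kthresh would have to be tuned to the input level, just as gkonsetlev is.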

Among the other original ideas in this patch are the ability to adapt the tempo of the algorithm to that of the performer and to set a pitch that matches the pitch of the count-in pulses. The initial count-in also seems noteworthy to me: the performer simply plays four pulses in front of the microphone to activate the algorithm and set a prepared event in motion. Let us now look at the global variables shown in the example below.

; global user-configurable variables
gkonsetlev init 1500 ; set depending on input level
gkoutlatency init 50 ; allows for latency in pause after count-in (in k-cycles)
gkinlatency init 13 ; allows for latency in expected beat position for in-song tempo (in k-cycles)
; global variables counting for tempo recognition
gkfirstcount init 0
gklastcount init 0
gkcountnumber init 0
gktempo init 0
gktempochange init 0
gkchecktempo init 0
; global variable for pitch
gkpitch init 0
; global variables for song position
gkbar init 1
gkbeat init 1
; global variable waiting for song end
gksilence init 0

Shown below is the behaviour of the code during the count-in, i.e. while three or fewer pulses have been counted.

if (gkcountnumber <= 3) then

; A)

; if it's the first note played start counting

if (krms > gkonsetlev && gkcountnumber == 0) then

kbeatpitch, krms pitchamdf asig, 40, 80

gkpitch = gkpitch + kbeatpitch

gkfirstcount = ktime ; time of the first beat, in k-cycles

gklastcount = ktime

gkcountnumber = gkcountnumber + 1

printk 0, gkfirstcount ; debug, in k-cycles

; B)

; if it's the second or third keep counting

elseif (krms > gkonsetlev && gkcountnumber < 3 && ktime > gklastcount+(kr/2)) then

kbeatpitch, krms pitchamdf asig, 40, 80

gkpitch = gkpitch + kbeatpitch

gklastcount = ktime

gkcountnumber = gkcountnumber + 1

printk 0, gklastcount ; debug, in k-cycles

; C)

; if no further pulse arrives within 2 seconds (kr*2 k-cycles), restart the count

elseif (gkcountnumber > 0 && ktime > gklastcount+(kr*2)) then

gkcountnumber = 0

printks "Restarting the count in\n", 0 ; print a message

; D)

; if it's the fourth and final count then extract tempo and pitch and generate chords

elseif (krms > gkonsetlev && gkcountnumber == 3 && ktime > gklastcount+(kr/2)) then

kbeatpitch, krms pitchamdf asig, 40, 80 ; extract pitch and envelope of current input

gkpitch = (gkpitch + kbeatpitch)/4

gklastcount = ktime

gkcountnumber = gkcountnumber + 1

; calculate the average time between the intro beats

gktempo = int((gklastcount - gkfirstcount)/3)

; beat period in k-cycles: inversely proportional to the speed of the pulses

printk 0, gktempo ; debug, in k-cycles

endif

endif
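The tempo calculation at step D can be checked with a little arithmetic. timek counts k-cycles, so the beat period is obtained in k-cycles and converts to beats per minute through the control rate $k_r$. The figures below assume sr = 44100 and ksmps = 64 ($k_r = 689.0625$ Hz), which the excerpt does not state, and a count-in played at exactly 120 bpm:

```latex
T_{\text{beat}} = \operatorname{int}\!\left(\frac{t_4 - t_1}{3}\right)\ \text{k-cycles},
\qquad
\text{tempo} = \frac{60\,k_r}{T_{\text{beat}}}\ \text{bpm}

t_4 - t_1 \approx 3 \times 0.5\,\text{s} \times 689.0625\,\text{Hz} \approx 1034,
\qquad
T_{\text{beat}} = \operatorname{int}(1034/3) = 344,
\qquad
\text{tempo} = \frac{60 \times 689.0625}{344} \approx 120.2\ \text{bpm}
```

The truncation by int() loses less than one k-cycle (about 1.45 ms at this control rate) per beat; the in-song tempo adjustment through gktempochange can compensate for small errors of this kind.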

Shown below is the code that runs once the count-in is complete, i.e. when more than three pulses have been counted.

if (gkcountnumber > 3) then

; if the count-in is complete and it's exactly the start of a beat to trigger the backing music

if (ktime == (gklastcount + gktempo - gkoutlatency)) then

gklastcount = ktime + gkoutlatency

gktempo = gktempo + gktempochange

gktempochange = int(gktempochange/2)

; increment the silence count

gksilence = gksilence + 1

; if a root note is being played fine tune the pitch

kpitchcheck, krms pitchamdf asig, 40, 80, 55, 0, 3

if (kpitchcheck < gkpitch*1.03 && kpitchcheck > gkpitch*.97) then

; take the average of the believed and the new

gkpitch = (kpitchcheck + gkpitch) / 2

endif

event "i", 2, 0, 1/kr ; call the instrument that generates the backing music

; ...HERE SOME CORRECTIONS OF TIME ARE MADE

; if count is complete and it's close to the start of a beat check we're in pitch and in time

elseif (ktime>=(gklastcount+gkinlatency-10) && ktime<=(gklastcount+gkinlatency+10)) then

; if a note is already ringing at the start of the window, don't use this beat to adjust the tempo

if (ktime==(gklastcount+gkinlatency-10) && krms>gkonsetlev) then

; stop any more checking this beat

gkchecktempo = 0

; once inside window if a note is played adjust tempo accordingly

elseif (gkchecktempo==1 && krms>gkonsetlev) then

printk 0, ktime-(gklastcount+gkinlatency)

; adjust tempo

gktempochange = gktempochange + ktime-(gklastcount+gkinlatency)

; stop any more checking this beat

gkchecktempo = 0

; once at the end of the window

elseif (ktime==(gklastcount+gkinlatency+10) && gkchecktempo==0) then

; end of check, reset gkchecktempo so it starts the check again next beat

gkchecktempo = 1

; there has been sound so reset the silence count

gksilence = 0

endif

endif

endif

Once more than three pulses have been counted, Accompanist triggers the backing music on each beat with the call shown below, which starts instrument 2 for a single k-cycle (1/kr seconds).

event "i", 2, 0, 1/kr

Claire by Matt Ingalls

Another very original patch that diverges from normal methods for live electronics involving a performer, microphone and computer is Claire 2.0 by Matt Ingalls.

Matt writes that:

"I should first come clean and say that the last half of the piece is a pure tape part which I really like for its practicality but this also raises some philosophical/aesthetic issues such as: what is the difference between playing a 1 sec sample or a 1 min sample? Or a 10 min tape piece for that matter? But if you are interested in the first part of the piece, I will try to explain it here. My approach comes from George Lewis - you should check out his Voyager if you haven’t already. The basic structure is fairly straight-forward: Multiple, ‘always on’, instruments are created, each one addressing a different task." [2]

To illustrate Matt's comments, I will quote certain passages of the code. The score starts the three main instruments, which run for the whole piece:

i "Input" 1 999999
i "Analysis" 1 99999
i "PlayMode" 3 999999

Matt writes: "Input: a ‘pitch’ opcode and logic to determine note on/offs, write to buffer (I know I tried all the pitch-tracking opcodes and this one was the best one for me: accuracy & cpu)" [2].

The call to pitch is shown below; it follows the acquisition and filtering of the microphone signal.

asig = asig ; the acquired and filtered microphone signal
iupdte = .02 ; period, in seconds, at which the outputs are updated
ilo = 6 ; lower limit of the pitch range, in octave point decimal
ihi = 12 ; upper limit of the pitch range, in octave point decimal
idbthresh = 20 ; amplitude, in dB, needed for a pitch to be detected; once started, tracking continues until the level is 6 dB lower
ifrqs = 120 ; number of divisions of an octave (default 12, maximum 120)
iconf = 10 ; number of conformations needed for an octave jump (default 10)
istrt = 8 ; starting pitch for the tracker (default (ilo + ihi)/2)
iocts = 7 ; number of octave decimations in the spectrum (default 6)
gkCurPitch, gkCurAmp pitch asig, iupdte, ilo, ihi, idbthresh, ifrqs, iconf, istrt, iocts ; extract pitch (octave point decimal) and amplitude

Matt explains the function of the Input instrument as follows:

"[it] mostly determines if a new ‘note’ has started and/or the current ‘note’ has ended. Since what we hear as one of those can either be a change in pitch or in amplitude, there is some logic needed to determine that. At the same time, I keep track of the duration of notes and the time between the last note starting and the current note starting, which I call ‘density’."

The portion of the code that implements the note-on and note-off logic is particularly enlightening, because it gives precise control over how the signal is divided into notes.

The initialization of the k variables is shown below.

kIndex init 0 ; tables index
kOnPitch init 0 ; pitch when new note started
kOnAmp init 0 ; amp when new note started
kLastPitch init 0 ; pitch from last k-pass
kAvePitch init 0 ; low-pass of pitch
kLastAmp init 0 ; amp from last k-pass
kNoteDur init 0 ; duration counter for currently playing note
kOnCount init 0 ; time since last note on [density]
kOnOnDur init 0 ; time since last note on at current note on [density]
; Determine whether we need to turn notes off or on upon every k-pass
kNewOff = 0 ; default to 0
gkNewOn = 0 ; default to 0

This section of the code shows the approach for amplitude.

if (gkCurAmp == 0) then ; if no signal then...

if (kOnPitch > 0) then

kNewOff = 1

endif

else ; has input:

; special trick pause

if (gkGlobalWait > 0) then

gkGlobalWait = 0

kOnOnDur = 0

endif

endif

This section of the code shows the approach for pitch.

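if (abs(gkCurPitch - kLastPitch) > 1/24) then ; pitch moved by more than 1/24 octave (a quarter-tone)
  if (kOnPitch > 0) then ; a note is already sounding...
    if (kNoteDur > kr/20) then ; ...and has lasted more than 1/20 s (50 ms)
      kNewOff = 1
      gkNewOn = 1
    endif
  else
    gkNewOn = 1
  endif
endif
kNoteDur = kNoteDur + 1
kLastPitch = gkCurPitch
kAvePitch port gkCurPitch, .1
kLastAmp = gkCurAmp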

Matt explains that "when the note ends, I write its info (pitch, dur, amp, density) to a table used in the ‘analysis’ instrument".[3]

tablew dbamp(kOnAmp), kIndex, giAmp ; amplitude
tablew kLastPitch, kIndex, giPit ; pitch
tablew kNoteDur/kr, kIndex, giDur ; duration, converted from k-cycles to seconds
tablew kOnOnDur, kIndex, giDen ; density

Matt continues to describe "Analysis: averages & limits of input" [2].

Below is the code that calculates the average and the limits (minimum and maximum) of the input, shown for the amplitude values only.

kIndex init 0

; initialize

if (kIndex == 0) then ; kIndex runs from 0 to 15; each time it returns to 0...

kAmpMin = 99999 ; ...all parameters are reset to these defaults

kAmpMax = 0

kAmpMea = 0

[omission]

endif

; for every kpass, do one item in table

kAmp table kIndex, giAmp

if (kAmp < kAmpMin) then

kAmpMin = kAmp

endif

if (kAmp > kAmpMax) then

kAmpMax = kAmp

endif

kAmpMea = kAmpMea + kAmp

[omission]

kIndex = kIndex + 1

Because one table item is read on each k-pass, these values are refreshed every giTableSize (here 16) k-cycles, once the whole table has been scanned. When the condition below is satisfied, the gk values are passed to the PlayMode instrument.

if (kIndex == giTableSize) then ; if kIndex = 16 then ...

gkAmpMin = kAmpMin ; minimum value (amp) for the random values in the PlayMode instrument

gkAmpMax = kAmpMax ; maximum value (amp) for the random values in the PlayMode instrument

gkAmpMea = kAmpMea/giTableSize ; average value, used for an event in PlayMode (p4 of an event)

[omission]

kIndex = 0 ; ...kIndex reset

endif

endin
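Claire's Analysis instrument reads one table item per k-pass, which spreads the work over 16 k-cycles. For comparison, here is a hypothetical sketch that scans the whole table in a single k-pass with a while loop; the table contents are invented and only the amplitude statistics are shown. With these values it should report a minimum of 55, a maximum of 80 and a mean of about 66.69.

```csound
<CsoundSynthesizer>
<CsOptions>
-n -d
</CsOptions>
<CsInstruments>
sr = 44100
ksmps = 64
nchnls = 1
0dbfs = 1

giTableSize = 16
; invented note amplitudes (dB) standing in for Claire's giAmp table
giAmp ftgen 0, 0, giTableSize, -2, 60, 72, 55, 80, 66, 70, 58, 62, 75, 68, 59, 61, 77, 64, 69, 71

instr 1
  kAmpMin = 99999                 ; reset on every k-pass
  kAmpMax = 0
  kAmpSum = 0
  kIndex  = 0
  while kIndex < giTableSize do   ; the whole table in one k-pass
    kAmp    table kIndex, giAmp
    kAmpMin min kAmpMin, kAmp
    kAmpMax max kAmpMax, kAmp
    kAmpSum = kAmpSum + kAmp
    kIndex  = kIndex + 1
  od
  printks "min %.1f  max %.1f  mean %.2f\n", 1, kAmpMin, kAmpMax, kAmpSum / giTableSize
endin
</CsInstruments>
<CsScore>
i 1 0 0.1
e
</CsScore>
</CsoundSynthesizer>
```

The trade-off is CPU load per k-cycle: the loop does all 16 reads at once, whereas Claire's approach keeps the cost of each k-pass constant at the price of a slightly slower update.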

Matt concludes writing about Claire 2.0 that:

"+ Play: spawns ‘events’ (for an instrument playing pre-sampled sounds from my own playing) and has different ‘modes’ of playing based on analysis and lots of random numbers. I add more ‘play’ instruments as the piece progresses.

Then there are a couple gimmicks - silence, long tones, and a tape part that is the last half of the piece!

One thing that I came away with after doing this piece was that if I wanted to do this sort of thing again, I would strip out all the logic/algorithmic stuff and do it in C – a visual debugger and being able to step through code, set breakpoints, and view current variable values makes life so much easier it is worth the extra hassle of hosting a CsoundLib or a separate application sending MIDI messages to Csound or whatever. (Or just not even use Csound?)." [2]

Etude3 by Bruce McKinney

I will now consider Etude3 by Bruce McKinney[7]. He sent me his .csd file accompanied by explanatory notes on the intentions of the piece. Here is a description of the process from its program note:

"Etude No.3 is one of a set of studies for the trumpet and a computer. The computer program is interactive in that the performance of the trumpeter influences the pitches and rhythms produced by the computer. The language of the computer program is Csound, one of several computer-music languages which have been under development since the 1960s. The sounds which appear in this Etude include altered (processed) versions of the trumpeter’s timbre as well as new synthesized sounds produced by the computer but influenced by the way in which the trumpeter plays his part."

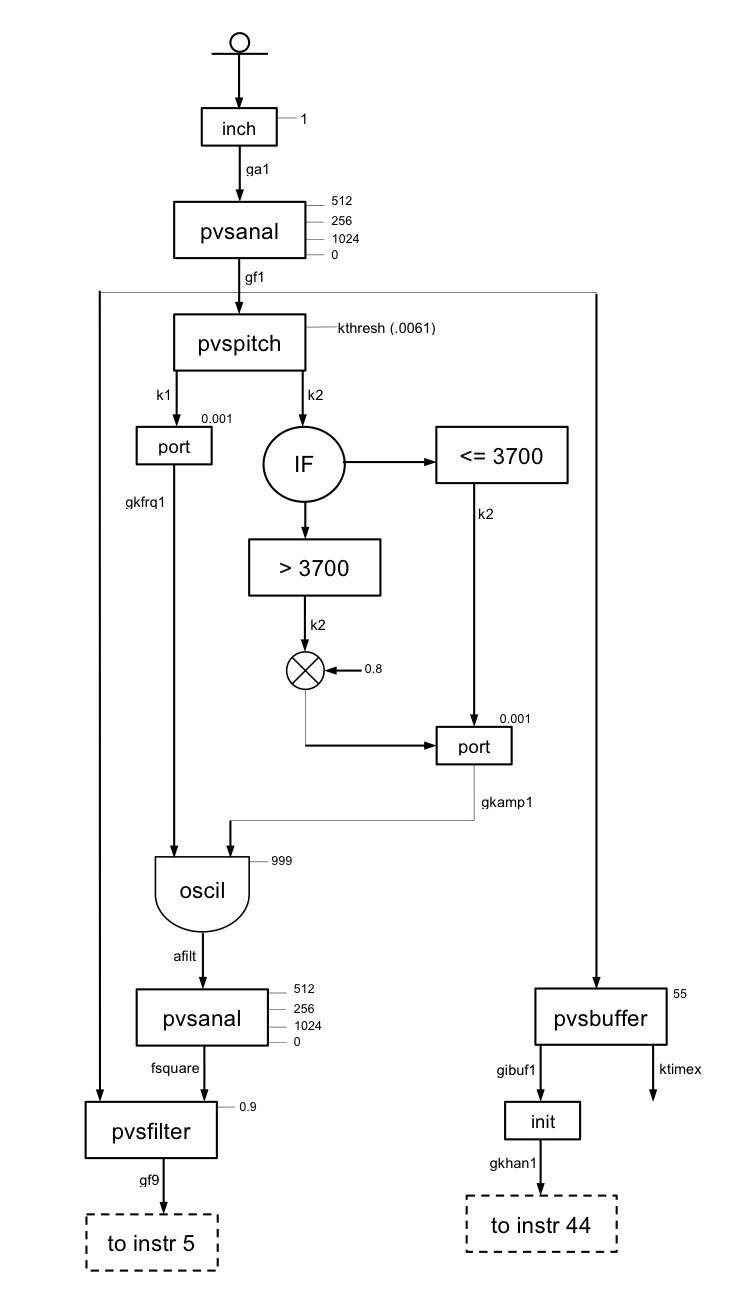

The author wants to leave the interpreter free of sliders, knobs, buttons, MIDI systems and so on and to make the algorithm react only to the sound of a musical instrument, in his case the trumpet. Shown below is a synthesis of the entire algorithm for Etude3 by Bruce McKinney.

The first part shown is where McKinney reads in the signal and performs an analysis on it with code utilizing the pvspitch opcode.

Figure 5. Flow chart from from Etude3.

- Clarinet-like instrument (instruments 1 and 44): input from the microphone to the computer (A/D conversion). Create a phase vocoder (PV) analysis.

- PV pitch/volume tracking of the sound at control rate.

- Put pv analysis into a buffer for use as "trumpet-like" delayed sound. Buffer turns over every 55 seconds.

- Compare input pv analysis with pv analysis of a square wave (clarinet-like). PV filter the input analysis so that only clarinet-like components are let through. This is the basis of the Clarinet-like instrument.

- Controller Instrument

- turns off instrument 44 and controls instrument 23.

- WAVE-SHAPER INSTRUMENT, instrument 23: NOT SOONER than every 0.7 seconds and ONLY IF the trumpet is playing

- Sample the trumpet pitch from the pitch tracker.

- Randomly choose one of 5 sets of pre-determined notes (defined as transpositions of the trumpet pitch) from a table. There are 1-5 notes in a set.

- Each note of the set is randomly displaced by -2 ...+2 octaves.

- Send the appropriate intervals to the "wave-shaper" instrument so that the notes play .2-.5 seconds apart.

- Functions of the wave-shaper instrument:

- selects amount of vibrato

- type of envelope for each note

- varies the timbre of each note

- low-pass filter at 1000 cps

- NB: Wave-shapers have been around for a long time. This is a very efficient way to synthesize an interesting-sounding timbre that is "wind-instrument" like.

- Trumpet-like delayed sound, instrument 5 - ONLY AFTER 30 seconds AND ONLY until a key is pressed on the keyboard (near the end of the piece).

- read a random amount of the buffer containing the input sound (1-5 seconds).

- randomly transpose the pv stream.

- randomly speed up/slow down the reading (faster/slower note play).

- randomly change the timbre of the sound by including more/fewer pv bins and/or starting with a higher bin and/or including only every 3rd (4th or 5th) bin.

Finally, reverb is added and the signal is sent to the output (D/A conversion).
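The pitch-tracking front end and the gating rules of the wave-shaper section can be sketched as follows. This is a hypothetical reconstruction from the notes above, not McKinney's code: a synthesized brass-like tone stands in for the trumpet, a plain sine voice stands in for the waveshaper, a single transposed note replaces the chosen set of 1-5 notes, and the analysis settings and thresholds are guesses. What it does take from the notes is the 0.7-second minimum interval, the condition that the trumpet must be playing, and the random displacement of -2 to +2 octaves.

```csound
<CsoundSynthesizer>
<CsOptions>
-o pvspitch_sketch.wav -W
</CsOptions>
<CsInstruments>
sr = 44100
ksmps = 64
nchnls = 1
0dbfs = 1

giSine  ftgen 0, 0, 8192, 10, 1
; invented harmonic spectrum standing in for a trumpet
giBrass ftgen 0, 0, 8192, 10, 1, 0.7, 0.5, 0.35, 0.25, 0.15

instr 1 ; analysis and control
  kcps0 linseg 466.16, 2, 466.16, 0.01, 349.23, 3, 349.23  ; Bb4, then F4
  asig  oscili 0.3, kcps0, giBrass         ; stand-in for the trumpet on the mic
  fsig  pvsanal asig, 1024, 256, 1024, 1   ; phase vocoder analysis
  kpit, kamp pvspitch fsig, 0.01           ; pitch (Hz) and amplitude
  printks "pitch %.1f Hz, amp %.3f\n", 0.5, kpit, kamp

  ; trigger NOT SOONER than every 0.7 s and ONLY IF there is sound
  know  timeinsts
  klast init -1
  if kamp > 0.01 && kpit > 0 && know - klast >= 0.7 then
    klast = know
    krnd  random -2, 2.999                 ; uniform in [-2, 2.999]
    koct  = floor(krnd)                    ; octave displacement -2 ... +2
    event "i", 2, 0, 0.4, kpit * (2 ^ koct)
  endif
endin

instr 2 ; plain sine voice (the waveshaper itself is not reproduced)
  aenv linseg 0, 0.02, 0.2, p3 - 0.12, 0.15, 0.1, 0
  asig oscili aenv, p4, giSine
  out asig
endin
</CsInstruments>
<CsScore>
i 1 0 5
f 0 6
e
</CsScore>
</CsoundSynthesizer>
```

The dummy statement f 0 6 simply keeps the performance running for one more second so that the last triggered notes can finish.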

Im Rauschen, cantabile by Luís Antunes Pena

The last piece I want to describe, Im Rauschen, cantabile (2012) by Luís Antunes Pena, is for double bass and electronics. The piece does not use a microphone for the live electronics; instead it is controlled directly from the computer keyboard by the operator, using sensekey (or sense), and by MIDI commands from a musical keyboard connected to the system. For a one-man performance, I think this strategy increases the number of events the performer can handle in real time. On the other hand, giving commands at the keyboard may distract the performer from playing, which could make an assistant for the live electronics necessary.

Pena writes (translated from the German):

"Im Rauschen, cantabile is a delirium for double bass and electronics based on the piece Im Rauschen Rot, a twenty-minute piece for double bass, percussion quartet and electronics, premiered in 2010 by Edicson Ruiz and the Drumming Ensemble. Im Rauschen, cantabile describes a state of constant tension: an organic tension in which uncertainty plays a role. At the same time it is a two-voice composition of melodies of a percussive and noisy nature, a movement between abstraction and empirical perception […]." [4]

Below is the part of the patch that reads the computer keyboard with the sensekey opcode.

kres, kkeydown sensekey

; kres = returns the number of the pressed key (if no key is pressed returns -1)

; kkeydown = 1 when a key is pressed, 0 otherwise (a 0/1/0 pulse for each key press)

gkkey = kres ; globalizes the value k of the number of the pressed key

kthreshold = .1 ; threshold

kmode = 0 ; operating mode

ktrig trigger kkeydown, kthreshold, kmode ; outputs 1 when a k-rate signal crosses the threshold (kmode 0: upward crossing)

schedkwhen ktrig, 0, 1, 4, 0, 0.1

; calls instrument 4, which in turn triggers the events (commands) corresponding to the pressed key

ktrigtoggle changed kres

; changed — k-rate signal change detector. This opcode outputs a trigger signal that informs when any one of its k-rate arguments has changed. Useful with valuator widgets or MIDI controllers.

schedkwhen ktrigtoggle, 0, 1, 5, 0, 0.1

; calls instrument 5, which, if + or - was pressed, activates other instruments that increase/decrease some values

Below is the list of the keyboard commands:

r ... record

p ... play original file

s ... stop

1-9 ... select samples

m ... mode forward/backward/for-and-back

+/- ... change loop duration

0 ... reset & print this info

The following partial code from instrument 4 shows how it triggers events according to this scheme.

ikey = i(gkkey)

if (ikey == 114) then

schedwhen 1, 99, 0, -1 ; start record

elseif (ikey == 115) then

schedwhen 1, 90, 2, .1 ; stop record / play

elseif (ikey == 49) then ; sample 1

gifn = 1

prints "\n\n\n -----------> Selected Sample: "

Sample strget gifn

prints Sample

prints "\n"

[snip]

elseif ikey == 48 then

schedwhen 1, 90, 0, .1 ; 0 info

elseif ((ikey == 112) || (ikey == 32)) then

idur filelen gifn

schedwhen 1, 11, 0, idur ; p play file

[snip]

endif
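In Pena's patch the key code is stored in the global gkkey and read by instrument 4 with i(gkkey). A possible variant, sketched below as a hypothetical example rather than the piece's actual code, passes the key code to the dispatcher as p4, so that each instance receives its own value. The printed messages merely stand in for the real actions; the key codes for r, s, 0 and 1-9 match the list above. Run it from a terminal so that sensekey can read the keys typed there.

```csound
<CsoundSynthesizer>
<CsOptions>
-odac -d
</CsOptions>
<CsInstruments>
sr = 44100
ksmps = 64
nchnls = 2
0dbfs = 1

instr 1 ; keyboard listener, always on
  kkey, kdown sensekey
  ktrig trigger kdown, 0.1, 0          ; 1 on each key-down transition
  if ktrig == 1 && kkey != -1 then
    event "i", 4, 0, 0.1, kkey         ; the key code travels as p4
  endif
endin

instr 4 ; dispatcher: placeholder messages instead of the real actions
  ikey = p4
  if ikey == 114 then                  ; r
    prints "record\n"
  elseif ikey == 115 then              ; s
    prints "stop\n"
  elseif ikey >= 49 && ikey <= 57 then ; 1-9
    prints "select sample %d\n", ikey - 48
  elseif ikey == 48 then               ; 0
    prints "reset & print info\n"
  else
    prints "key %d not mapped\n", ikey
  endif
endin
</CsInstruments>
<CsScore>
i 1 0 3600
e
</CsScore>
</CsoundSynthesizer>
```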

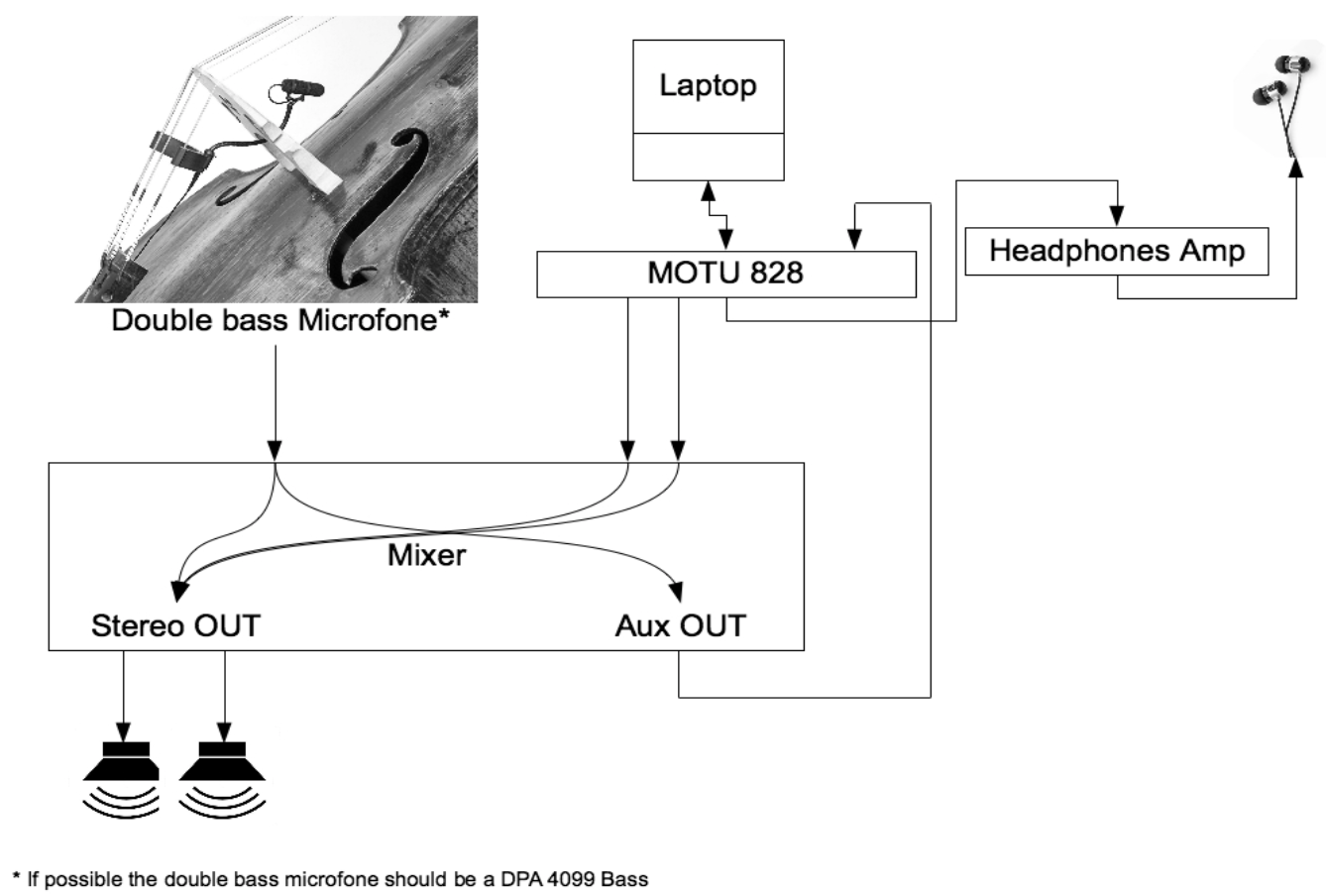

The technical equipment is described as follows in the score of the piece:

Figure 6. Schematic layout for Im Rauschen, cantabile.

The author has pointed out that "Mainly the live-patch of Csound controls the click-track and triggers the audio files". (L.A. Pena, email communication, September 4, 2015)

Some pieces require a metronome click for the performer, delivered through headphones (stereo) or an earpiece (mono), so the .csd often needs more output channels than expected (e.g. 3, 5 or 6). In the previously discussed piece Cluster, for example, the first four channels carry the sound of the piece and two further channels (5 and 6) carry the metronome.

The main part of Im Rauschen, cantabile is stereo, so the first two channels are reserved for it, and a third channel carries the metronome click.
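A three-channel layout of this kind can be sketched as follows: stereo material on channels 1 and 2, and a click on channel 3 for the performer's headphones. This is a hypothetical example, not Pena's patch. The "piece" is a pair of test tones, the tempo of 90 bpm is arbitrary, and the output is written to a file because not every audio interface has a third output.

```csound
<CsoundSynthesizer>
<CsOptions>
-o click_sketch.wav -W
</CsOptions>
<CsInstruments>
sr = 44100
ksmps = 64
nchnls = 3
0dbfs = 1

giSine ftgen 0, 0, 8192, 10, 1

instr 1 ; stand-in for the stereo part of the piece
  aL oscili 0.1, 220, giSine
  aR oscili 0.1, 330, giSine
  outch 1, aL, 2, aR                  ; channels 1-2: audience
endin

instr 10 ; metronome: p4 = tempo in bpm (90 in the score is arbitrary)
  ktick metro p4 / 60
  schedkwhen ktick, 0, 0, 11, 0, 0.03 ; one short click per beat
endin

instr 11 ; a single click
  aenv expon 1, p3, 0.001
  aclk oscili 0.5 * aenv, 1000, giSine
  outch 3, aclk                        ; channel 3 only: performer's headphones
endin
</CsInstruments>
<CsScore>
i 1  0 8
i 10 0 8 90
e
</CsScore>
</CsoundSynthesizer>
```

For a stereo headphone click, as in Cluster, the same idea extends to nchnls = 6 with the click sent to channels 5 and 6.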

To conclude this survey of patches for real-time processing in performance, I will briefly mention one more example: Will you fizz electric down the black wire to infinity (2009) by Rory Walsh[8], which uses a UC-33 MIDI controller.

Rory states within the comments of the .csd of this piece:

"This composition for piano and computer forms part of a larger work which is still under development. The piece focuses on heavily processed piano sounds which are captured in realtime by the computer. The computer then runs a sequence of different algorithms that manipulate and alter the sounds being output through the speakers. Rather than viewing this piece as a straightforward duet for piano and computer I see it more as a solo piece for computer, with the piano driving the computer’s performance. Special thanks to Orla who let me borrow a line of text from one of her emails and use it as a title for my piece."

II. Conclusion

Upon completing this extensive survey of the use of live electronics in chamber music with Csound, it is clear that programming these works is challenging, whether the language is applied to the few ‘historical’ pieces or to more recent compositions. Used in real time, Csound can present significant difficulties, particularly in matters of user control. However, after careful study of the code and of the opcodes available, it quickly becomes apparent that Csound excels at very precise and exacting processing of a signal. The signal is not merely an auxiliary element to be modified and shaped; it is a valid resource that can be used to determine, within the .csd, what ‘could be’ the actual path of the algorithm and therefore the fate of the piece, that is, what the composer, and on his behalf the performer, wants to realize in real time.

Acknowledgments

I would like to thank John ffitch, Eugenio Giordani, Joachim Heintz, Matt Ingalls, Iain McCurdy, Bruce McKinney, Alessandro Petrolati, Luis Antunes Pena, Vittoria Verdini and Rory Walsh for their help, in various ways, in the realization of these articles.

References

[1]E. Francioni: "Francioni-CLUSTER V (demo-2ch)," (for a percussionist, live electronics and sound support). Internet: https://soundcloud.com/enrico_francioni/cluster-v-demo-2ch, [Accessed October 17, 2016].

[2]Matt Ingalls. (2011, Feb. 20). "C+C interactive computer music," Csound mailing list archive, Nabble [Online]. Available: http://csound.1045644.n5.nabble.com/C-C-interactive-computer-music-td3328610.html

[3]Matt Ingalls. (2011, Feb. 23). "C+C interactive computer music," Csound mailing list archive, Nabble [Online]. Available: http://csound.1045644.n5.nabble.com/C-C-interactive-computer-music-td3328610i20.html

[4]Luís Antunes Pena, 2012. "Im Rauschen, cantabile." Available: http://luisantunespena.eu/imrauschencantabile.html. [Accessed Nov. 1, 2016].

[5]Andrew Brothwell and John ffitch, 2008. "An Automatic Blues Band", Proceedings of the 6th International Linux Audio Conference, Kunsthochschule für Medien Köln, pp. 12–17. Available: http://opus.bath.ac.uk/11394/2/13.pdf.

[6]Enrico Francioni, 2007. "eSpace", csd for real-time spatial movement, Pesaro, Italy.

[7]Bruce McKinney, 2007. "Etude3", for trumpet and computer, Cranford, NJ, USA.

[8]Rory Walsh, 2009. "Will you fizz electric down the black wire to infinity", for piano and computer.

Bibliography

J. Heintz, J. Aikin, I. McCurdy, et al. FLOSS Manuals, Csound. Amsterdam, Netherlands: Floss Manuals Foundation, [online document]. Available: http://www.flossmanuals.net/csound/ [Accessed May 25, 2013].

Riccardo Bianchini and Alessandro Cipriani, "Il Suono Virtuale," (Italian Edition). Roma, IT: ConTempo s.a.s., 1998.

Richard Boulanger, The Csound Book. Cambridge, MA: The MIT Press, 2000.

Enrico Francioni, Omaggio a Stockhausen, technical set-up digitale per una performance di "Solo für Melodieninstrument mit Rückkopplung, Nr. 19", in AIMI (Associazione Informatica Musicale Italiana), "ATTI dei Colloqui di Informatica Musicale", XVII CIM Proceedings, Venezia, October 15-17, 2008 (in Italian).

Karlheinz Stockhausen, Solo, für Melodieinstrument mit Rückkopplung, Nr. 19, UE 14789. Wien: Universal Edition, 1969.

Essential Sitography

AIMI (Italian Association of Computer Music). Internet: http://www.aimi-musica.org/, 2015 [Accessed October 16, 2016].

A. Cabrera, et al. "QuteCsound." Internet: http://qutecsound.sourceforge.net/index.html, [Accessed May 16, 2016].

apeSoft. Internet: www.apesoft.it, 2016 [Accessed October 16, 2016].

Boulanger Labs. cSounds.com. CSOUNDS.COM: The Csound Community, 2016 [Accessed May 16, 2016].

I. McCurdy and J. Hearon, eds. "Csound Journal". Internet: csoundjournal.com [Accessed May 16, 2016].

E. Francioni. "Csound for Cage’s Ryoanji: A possible solution for the Sound System," in Csound Journal, Issue 18. Internet: http://www.csoundjournal.com/issue18/francioni.html, August 3, 2013 [Accessed October 17, 2016].

E. Francioni. "Cage-Ryoanji_(stereo_version)_[selection]," (on SoundCloud). Internet: https://soundcloud.com/enrico_francioni/cage-ryoanji-stereo_version, [Accessed October 17, 2016].

E. Francioni. "Stockhausen-SOLO_[Nr.19]_für_Melodieninstrument_mit_Rückkopplung," (Version I). Internet: https://soundcloud.com/enrico_francioni/kstockhausen-solo_nr19_fur_melodieninstrument_mit_ruckkopplung, [Accessed October 17, 2016].

E. Francioni. "SOLO_MV_10.1 Solo Multiversion for Stockhausen’s Solo [N.19]," in Csound Journal, Issue 13. Internet: http://www.csoundjournal.com/issue13/solo_mv_10_1.html, May 23, 2010 [Accessed October 17, 2016].

iTunes. "SOLO [Nr.19]," by apeSoft. Internet: https://itunes.apple.com/us/app/solo-nr.19/id884781236?mt=8, [Accessed October 17, 2016].

J. Heintz. "Live Csound, Using Csound as a Real-time Application in Pd and CsoundQt," in Csound Journal, Issue 17. Internet: http://www.csounds.com/journal/issue17/heintz.html, Nov. 10, 2012 [Accessed October 17, 2016].

Biography

Enrico Francioni graduated in Electronic Music and in double bass at the Rossini Conservatory in Pesaro.

His works have been performed at Oeuvre-Ouverte, Cinque Giornate per la Nuova Musica, FrammentAzioni, CIM, EMUfest, VoxNovus, ICMC, Bass2010, Acusmatiq and elsewhere.

He gave the world premiere of Suite I by F. Grillo. As a composer and as a soloist he has won awards in national and international competitions. He has recorded for Dynamic, Agora, Orfeo and other labels. He is dedicated to teaching and has taught double bass at the Rossini Conservatory in Pesaro.

email: francioni61021 AT libero dot it